Real estate data startup uses AI to cut web scraping development time from 40 to 3 days

By Gabriel Marchelli, Startup Solutions Architect at AWS; Bruno Vilardi, Solutions Architect at e-Core and AWS Community Builder; Matheus Gonçalves, Data Engineer; and Ricardo Johnny, Cloud Architect at EEmovel.

The Client

EEmovel is a technology startup founded in 2014. It offers data-driven solutions for the real estate market. Its tools are designed to support decision-making for a range of business sectors, including real estate agencies, financial institutions, large retailers, construction firms, developers, and agribusiness companies.

The startup stands out for supporting the urban real estate market through a SaaS platform called EEmovel Brokers. With a lightweight, intuitive interface, the platform allows brokers and agencies to locate properties for acquisition, perform valuations, and manage their property portfolios. The platform draws on a massive dataset of more than 30 million active listings that are constantly updated.

Another key area for the startup is agribusiness with EEmovel Agro, a market intelligence platform that maps more than 7.5 million rural properties. The platform provides detailed information on crops, planting history, technical soil data, elevation, slope, and more. Additionally, it offers insights into property owners and potential leaseholders for agribusiness clients.

The company also offers valuations for rural and urban properties, as well as tools to determine ideal locations for new businesses, especially in the retail sector.

The Challenge

EEmovel performs extensive web scraping across a wide variety of real estate websites to obtain constantly updated property information (such as price, area, location, and other key attributes). The company scrapes data from approximately 11,000 real estate websites, resulting in 20 million listings per day.

Despite the broad reach and relevance of the collected data, the current process faces major challenges. The scraping methodology is largely manual and requires ongoing script maintenance, as frequent changes to real estate websites demand constant adjustments. This makes the process not only time-consuming but also costly and error-prone.

One of the biggest issues EEmovel faces is the high failure rate of its scripts. On average, 100 scripts break daily, far exceeding the capacity of the team responsible for fixing them. This issue is especially pronounced with websites from larger agencies, which often use custom designs and unique structures, making automation harder and requiring tailored code for each one. On average, a team member takes around 8 hours to fix a broken script, including identifying the error, implementing the fix, and validating the updated code.

Although the company has been successful in automating processes for simpler websites, more complex ones still require the involvement of multiple team members, significantly increasing operational costs. Maintaining these custom automations, coupled with the constant need for adaptation, makes the data collection process highly challenging and financially burdensome.

This scenario highlights the urgent need for significant improvements to the web scraping process, whether by building more robust and automated solutions or by adopting new technologies that can reduce human intervention and boost both efficiency and scalability.

Solution

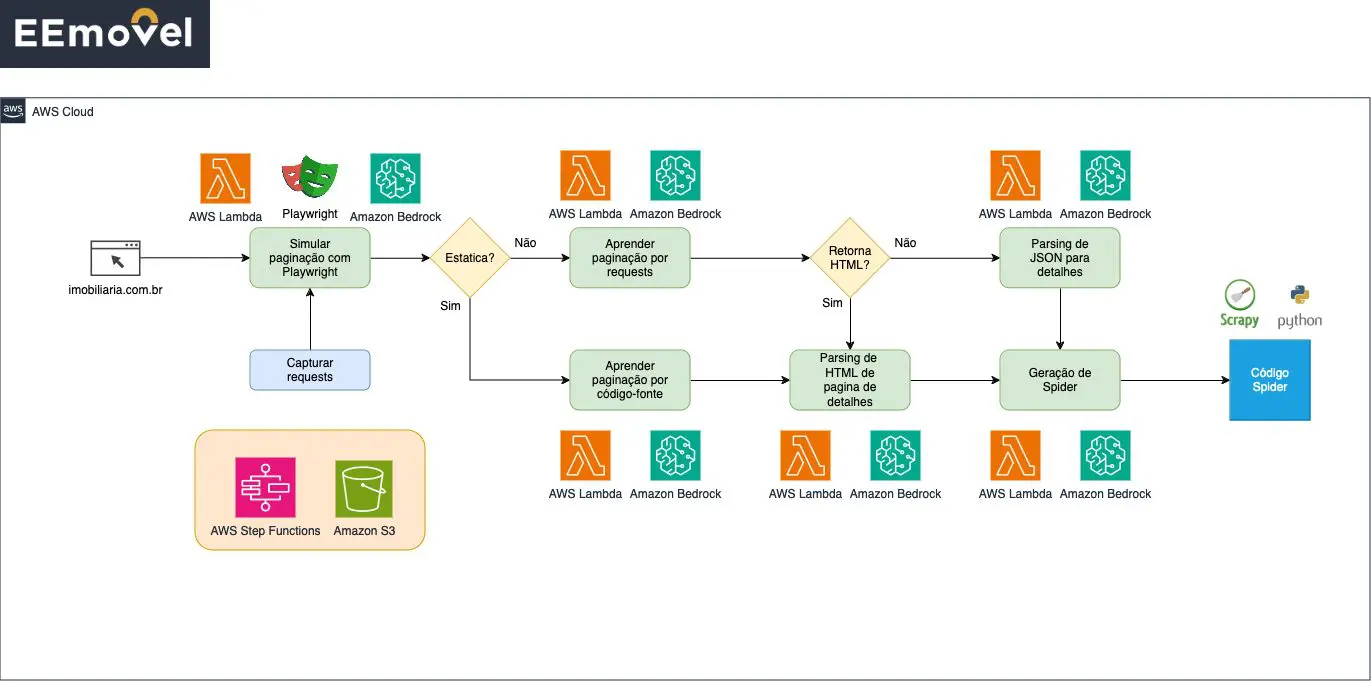

To address this challenge, EEmovel partnered with e-Core, an AWS Advanced Tier Services Partner, and worked alongside the AWS team and its own internal staff to develop the solution. Together they built an intelligent, automated architecture that combines AWS services with specialized scraping tools such as Playwright (browser automation) and Scrapy (a Python crawling framework). The goal was to optimize data collection and reduce manual maintenance using AWS Lambda (serverless compute for running functions), Amazon Bedrock (a fully managed generative AI service offering a choice of foundation models), AWS Step Functions (serverless workflow orchestration), and Amazon Simple Storage Service (Amazon S3) for object storage.

A key innovation in the architecture is the use of Amazon Bedrock, with Anthropic’s Claude 3.5 Sonnet model, to accelerate the creation and correction of Python spider scripts. The large language model (LLM) receives a prompt that a previous step assembles from predefined code templates. After the LLM creates or corrects the code, a human reviewer validates it and makes any necessary adjustments before deployment. In practice, this lets the pipeline keep pace with website changes: when a site’s structure changes, the model produces updated scraping logic, which reduces the number of broken scripts and improves overall efficiency.
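The generate-and-review loop described above can be sketched in Python. This is a minimal illustration, assuming the Amazon Bedrock Converse API in boto3 and the Claude 3.5 Sonnet model ID `anthropic.claude-3-5-sonnet-20240620-v1:0`; the prompt template and the helper names `build_fix_prompt` and `request_fix` are hypothetical, since EEmovel’s actual templates are not published. `boto3` is imported inside `request_fix` so the prompt-building step can run without AWS credentials.

```python
from string import Template

# Hypothetical code-correction template; EEmovel's real templates are not public.
PROMPT_TEMPLATE = Template(
    "You are maintaining a Scrapy spider for the real estate site $site_url.\n"
    "The spider below stopped returning listings.\n"
    "Error log:\n$error_log\n\n"
    "Current spider code:\n$spider_code\n\n"
    "Return only the corrected Python code. Extract price, area, and location."
)

# Claude 3.5 Sonnet model ID on Amazon Bedrock (check availability in your Region).
MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def build_fix_prompt(site_url: str, spider_code: str, error_log: str) -> str:
    """Fill the correction template with site-specific context."""
    return PROMPT_TEMPLATE.substitute(
        site_url=site_url, spider_code=spider_code, error_log=error_log
    )


def request_fix(prompt: str, region: str = "us-east-1") -> str:
    """Send the prompt through the Bedrock Converse API and return the model's reply."""
    import boto3  # lazy import: only needed when actually calling Bedrock

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(
        modelId=MODEL_ID,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 4096, "temperature": 0.0},
    )
    # The returned code is a draft: a human reviewer validates it before deployment.
    return response["output"]["message"]["content"][0]["text"]
```

A temperature of 0 is chosen here to make code output more repeatable between runs; the reviewer step remains the safeguard against incorrect fixes.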

Additionally, AWS Lambda lets the system scale automatically and handle large data volumes, processing up to 15 million listings per day. AWS Step Functions orchestrates these functions.
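Step Functions can fan this work out with a Map state that invokes one Lambda function per site. The following is a minimal sketch of such an Amazon States Language definition, built as a Python dictionary; the function ARN, the `sites` input field, the concurrency limit, and the retry policy are illustrative assumptions, not EEmovel’s configuration.

```python
import json

# Placeholder ARN (assumption): the Lambda function that runs a single spider.
SPIDER_FUNCTION_ARN = "arn:aws:lambda:us-east-1:123456789012:function:run-spider"


def build_state_machine(max_concurrency: int = 50) -> dict:
    """Return an ASL definition that runs one Lambda invocation per site, in parallel."""
    return {
        "Comment": "Run one spider per site (illustrative sketch)",
        "StartAt": "ScrapeSites",
        "States": {
            "ScrapeSites": {
                "Type": "Map",
                "ItemsPath": "$.sites",  # expected input: {"sites": [{...}, ...]}
                "MaxConcurrency": max_concurrency,
                "ItemProcessor": {
                    "ProcessorConfig": {"Mode": "INLINE"},
                    "StartAt": "RunSpider",
                    "States": {
                        "RunSpider": {
                            "Type": "Task",
                            "Resource": "arn:aws:states:::lambda:invoke",
                            "Parameters": {
                                "FunctionName": SPIDER_FUNCTION_ARN,
                                "Payload.$": "$",  # pass the site item through
                            },
                            "Retry": [
                                {
                                    "ErrorEquals": ["States.TaskFailed"],
                                    "IntervalSeconds": 5,
                                    "MaxAttempts": 2,
                                    "BackoffRate": 2.0,
                                }
                            ],
                            "End": True,
                        }
                    },
                },
                "End": True,
            }
        },
    }


definition_json = json.dumps(build_state_machine(), indent=2)
```

The resulting JSON string could be passed as the `definition` argument of the boto3 Step Functions `create_state_machine` call, together with an IAM role ARN. `MaxConcurrency` caps how many spiders run at once, which protects both the Lambda concurrency quota and the scraped sites.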

The architecture, as illustrated in the solution diagram, begins by using Playwright to simulate pagination on each real estate website and capture the HTTP requests the site makes. This simulation is critical because many modern websites load content dynamically and require JavaScript interaction before they return complete data.
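A capture step along these lines could be written with Playwright’s synchronous Python API. This is a hypothetical sketch: `capture_pagination`, `data_requests`, the `next_selector` argument, and the choice to keep only document, XHR, and fetch requests are assumptions for illustration. Playwright is imported inside the function because it needs installed browser binaries, which lets the filtering step run on its own.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CapturedRequest:
    url: str
    method: str
    resource_type: str  # Playwright resource type, e.g. "document", "xhr", "fetch"


def data_requests(captured: List[CapturedRequest]) -> List[CapturedRequest]:
    """Keep requests likely to carry listing data; drop images, fonts, scripts, etc."""
    return [r for r in captured if r.resource_type in {"xhr", "fetch", "document"}]


def capture_pagination(url: str, next_selector: str, pages: int = 3) -> List[CapturedRequest]:
    """Open the site, click a (site-specific) 'next page' element, and record requests."""
    from playwright.sync_api import sync_playwright  # lazy import: needs browsers

    captured: List[CapturedRequest] = []
    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        # Record every request the page issues, including JavaScript-driven ones.
        page.on(
            "request",
            lambda req: captured.append(
                CapturedRequest(req.url, req.method, req.resource_type)
            ),
        )
        page.goto(url, wait_until="networkidle")
        for _ in range(pages - 1):
            if page.locator(next_selector).count() == 0:
                break  # no further pages
            page.click(next_selector)
            page.wait_for_load_state("networkidle")
        browser.close()
    return data_requests(captured)
```

For example, `capture_pagination("https://example.com/imoveis", "a.next-page")` would return the data-bearing requests issued across the first three pages; the selector is a placeholder that differs per site.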

After capturing the requests, the solution takes one of two paths, depending on whether the site is static or dynamic:

- For static websites, the pagination structure can be inferred directly from the HTML source code. Once the pattern is identified, the data is collected straight from those pages, simplifying the extraction process.

- For dynamic sites, the system learns the pagination logic from the captured requests. If a request returns HTML, the page code is parsed to identify property data; if it returns JSON, the system extracts the relevant details from the JSON payload.
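Both paths above rely on spotting how page URLs change and on routing each response to the right parser. The sketch below is one hypothetical way to do that with the standard library: `infer_pagination` compares two consecutive page URLs to find the integer query parameter that grows, and `parse_listing_response` dispatches on the content type. The function names, the `price` CSS class, and the `{"results": [...]}` JSON shape are assumptions, not details from EEmovel’s system.

```python
import json
from html.parser import HTMLParser
from typing import List, Optional, Tuple
from urllib.parse import parse_qs, urlencode, urlparse, urlunparse


def infer_pagination(url_a: str, url_b: str) -> Optional[Tuple[str, int]]:
    """Find the integer query parameter that grows between two consecutive page URLs."""
    qa = parse_qs(urlparse(url_a).query)
    qb = parse_qs(urlparse(url_b).query)
    for key in sorted(qa.keys() & qb.keys()):
        a, b = qa[key][0], qb[key][0]
        if a.isdigit() and b.isdigit() and int(b) > int(a):
            return key, int(b) - int(a)  # e.g. ("page", 1) or ("offset", 20)
    return None


def page_url(url: str, param: str, value: int) -> str:
    """Rebuild url with the pagination parameter set to value."""
    parts = urlparse(url)
    query = parse_qs(parts.query)
    query[param] = [str(value)]
    return urlunparse(parts._replace(query=urlencode(query, doseq=True)))


class _PriceCollector(HTMLParser):
    """Collect text from elements whose class list contains 'price' (hypothetical markup)."""

    def __init__(self) -> None:
        super().__init__()
        self._capturing = False
        self.prices: List[str] = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._capturing = True

    def handle_endtag(self, tag):
        self._capturing = False  # assumes prices sit in leaf elements

    def handle_data(self, data):
        if self._capturing and data.strip():
            self.prices.append(data.strip())


def parse_listing_response(content_type: str, body: str) -> list:
    """Route a captured response to the JSON or the HTML extraction path."""
    if "json" in content_type:
        payload = json.loads(body)
        # Hypothetical payload shape: {"results": [{"price": ...}, ...]}
        return [item.get("price") for item in payload.get("results", [])]
    collector = _PriceCollector()
    collector.feed(body)
    return collector.prices
```

For a static site, the two URLs passed to `infer_pagination` would come from pagination links in the HTML; for a dynamic site, from two consecutive requests captured during the simulated pagination.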

Regardless of the path, the solution automatically generates Python spider scripts built on the Scrapy framework. This approach produces customized code for each site and removes most of the need for manual intervention.
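To make the idea of template-based generation concrete, here is a hypothetical example of filling a Scrapy spider template with site-specific selectors. The template, `render_spider`, and the selector keys are illustrative assumptions; the Scrapy calls in the generated code (`response.css(...).get()`, `response.follow`) are standard Scrapy API. Values are inserted as `repr()` literals so quotes in selectors cannot break the generated code, and `ast.parse` rejects any output that is not valid Python before it reaches review.

```python
import ast
from string import Template

# Hypothetical base template for generated spiders; selectors are filled in per site.
SPIDER_TEMPLATE = Template('''\
import scrapy


class ${class_name}(scrapy.Spider):
    name = ${spider_name}
    start_urls = [${start_url}]

    def parse(self, response):
        for card in response.css(${card_selector}):
            yield {
                "price": card.css(${price_selector}).get(),
                "area": card.css(${area_selector}).get(),
                "location": card.css(${location_selector}).get(),
            }
        next_page = response.css(${next_selector}).get()
        if next_page:
            yield response.follow(next_page, callback=self.parse)
''')


def render_spider(site_id: str, start_url: str, selectors: dict) -> str:
    """Fill the template with repr()-quoted literals and check the result parses."""
    source = SPIDER_TEMPLATE.substitute(
        class_name=site_id.title().replace("_", "") + "Spider",
        spider_name=repr(site_id),
        start_url=repr(start_url),
        card_selector=repr(selectors["card"]),
        price_selector=repr(selectors["price"] + "::text"),
        area_selector=repr(selectors["area"] + "::text"),
        location_selector=repr(selectors["location"] + "::text"),
        next_selector=repr(selectors["next"] + "::attr(href)"),
    )
    ast.parse(source)  # raises SyntaxError if the rendered code is not valid Python
    return source
```

In a pipeline like the one described, the rendered source (or an LLM-edited version of it) would then go to a human reviewer before deployment.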

With this new approach, EEmovel significantly reduced the manual effort and cost associated with script maintenance. Additionally, the response time to website changes has improved, as the automated architecture can detect and resolve scraping issues much more quickly.
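The article does not describe how broken scripts are detected. One plausible approach, shown here as a hypothetical sketch, compares each crawl’s output with a historical baseline and flags spiders whose item count collapses or whose required fields go missing. `CrawlStats`, `needs_repair`, `repair_queue`, and both thresholds are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CrawlStats:
    site_url: str
    items_scraped: int
    items_missing_price: int


def needs_repair(stats: CrawlStats, baseline_items: int,
                 min_ratio: float = 0.5, max_missing_ratio: float = 0.2) -> bool:
    """Flag a spider whose output collapsed or whose required fields went missing."""
    if stats.items_scraped == 0:
        return True  # nothing returned: selectors or pagination likely broke
    if baseline_items > 0 and stats.items_scraped < min_ratio * baseline_items:
        return True  # far fewer listings than this site usually yields
    return stats.items_missing_price / stats.items_scraped > max_missing_ratio


def repair_queue(runs: List[CrawlStats], baselines: Dict[str, int]) -> List[str]:
    """Return the sites whose spiders should be sent for LLM-assisted correction."""
    return [r.site_url for r in runs if needs_repair(r, baselines.get(r.site_url, 0))]
```

Comparing against a per-site baseline, rather than a fixed item count, accounts for the large size differences between small agency sites and the larger portals.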

The ability to scale the process across a diverse set of websites, without manually adjusting each script, makes the operation smoother and more efficient. Ultimately, this allows EEmovel to focus on analyzing collected data and developing valuable insights rather than allocating resources to repetitive script maintenance.

This transformation of EEmovel’s scraping process, powered by technologies such as Playwright, Scrapy, Lambda, and Amazon Bedrock, has introduced a new level of automation and efficiency. Because adaptive spiders can now be generated quickly when websites change, the development team can focus on higher-value tasks while the system keeps data collection accurate and up to date.

Results

EEmovel achieved significant, measurable results with the new architecture developed with support from e-Core and AWS.

The architecture enables the simultaneous creation and correction of multiple scripts, speeding up adaptation to site changes. Previously, expanding into a new city with 200 real estate websites required a five-person team producing a combined five scripts per day, taking about 40 days to complete all 200 scripts. With the new solution, the same team can now develop 80 scripts per day, completing the same task in just 2.5 days. This enabled much faster expansion while maintaining delivery quality.

In terms of operational cost, expanding into a city with 200 sites previously cost $3,600; with the new architecture, it costs just $800.

The solution also drastically reduced script correction time, from 8 hours to just 30 minutes using Amazon Bedrock. The optimized process now generates new code in 10 minutes and completes human validation in 20 minutes, allowing a single professional to fix up to 16 scripts per day. The cost per script dropped from $18 to $4, a 78% reduction.
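The reported figures can be cross-checked with a few lines of arithmetic. Dividing the $3,600 and $800 city-expansion costs by 200 scripts gives the $18 and $4 per-script costs, and the 16 fixes per day assume an 8-hour working day, which the text implies but does not state.

```python
SITES_PER_CITY = 200

# Development throughput for one city (combined team output per day)
days_before = SITES_PER_CITY / 5    # 5 scripts/day  -> 40 days
days_after = SITES_PER_CITY / 80    # 80 scripts/day -> 2.5 days

# Per-script cost implied by the city-expansion totals
cost_per_script_before = 3600 / SITES_PER_CITY
cost_per_script_after = 800 / SITES_PER_CITY
reduction_pct = round(100 * (1 - cost_per_script_after / cost_per_script_before))

# Correction capacity of one professional, assuming an 8-hour working day
minutes_per_fix = 10 + 20           # 10 min code generation + 20 min human validation
fixes_per_day = (8 * 60) // minutes_per_fix
```

The exact reduction is 1 − 4/18 ≈ 77.8%, which rounds to the 78% quoted above.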
